Evaluation questions

You probably have some idea of why you are conducting an evaluation. For instance, evaluations can directly affect future actions or decisions or change someone’s attitude or way of thinking.1 Evaluation questions transform a vague idea into specific elements that are tied to one or more outcomes and can be measured through assessments.

All evaluations answer the same three fundamental questions, according to the CAISE Principal Investigator’s Guide.

- What? (What happened or happens and what are the results?)

- So what? (Why did the results occur the way they did, and what are the implications?)

- Now what? (What actions, decisions, or recommendations can be made based on the results?)

Do’s and Don’ts of evaluation questions

- DON’T ask more questions than you have time or resources to answer

- DO prioritize questions based on the value of their answers and your ability to answer them

- DON’T come up with all the questions yourself

- DO talk to stakeholders for their input

- DON’T try to answer all questions from every stakeholder

- DO use stakeholder input to inform the evaluation design, not determine it

- DON’T ask questions that are too broad or aspirational

- DO ask questions that can be answered realistically

Types of evaluations

Front-end evaluation

Front-end evaluation occurs in the Identifying stage of a library program or service. It is similar to user research and is used to inform the initial design of a program.2

Examples:

- What aspects of this topic are our youth interested in?

- What is our audience’s current skill level?

Formative evaluation

Formative evaluation occurs in the Reflecting stage of a library program or service and consists of periodic evaluations conducted during the development and implementation of a program. It is used for course-correcting and making changes if necessary. Formative evaluation can look at how a project is progressing towards its goals and whether the implementation of the project is going according to plan.3

Examples:

- Are participants learning what we expected?

- Are there any issues with implementation of the program?

Summative evaluation

Summative evaluation occurs in the Documenting stage of a library program or service and focuses on conditions at the end of the program. It is an evaluation of the entire process, and it may report results from earlier formative evaluations as well.

Examples:

- Did participants learn what we wanted them to learn, and as much as we wanted?

- What was the value of the partnership?

Using assessments in evaluations

Assessments used in evaluations are not much different from the standalone assessments discussed earlier. Since evaluations ask deep and complex questions, however, they typically involve multiple assessments to help build a better picture and construct stronger evidence.

Indicators

Assessments used in evaluations need to be tied directly to the outcome or outcomes you are evaluating. You need to choose indicators that will provide measurable ways to show the impact of your initiative in relation to that outcome. To determine the appropriate indicators for your project, look at one of your outcomes and ask “How will I know it when I see it?”4

Just as the outcomes of connected learning are often nontraditional, so are the indicators. Using a variety of indicators to answer a single question can help triangulate your interpretations. (Sometimes the same indicator can also help answer more than one question.) Traditional library assessment measures (number of attendees, number of questions asked, how often a resource is used, patron satisfaction) play an important role and can be part of your evaluation. However, in a connected learning evaluation, they should exist in support of other measures.
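If you keep your evaluation plan in a spreadsheet or script, the relationship between questions, outcomes, and indicators can be written down explicitly. The following is only a minimal sketch of that idea, not a method from the sources cited here. The outcome wording, the indicator names (such as `portfolio_review`), and the `review` helper are all hypothetical and chosen for illustration. The check flags questions that have fewer than two indicators (nothing to triangulate) or that rely only on the traditional measures listed above.

```python
from dataclasses import dataclass, field

# Traditional library measures named in the text above (labels are illustrative).
TRADITIONAL = {"attendance", "questions_asked", "resource_use", "patron_satisfaction"}


@dataclass
class EvaluationQuestion:
    text: str
    outcome: str  # the outcome this question is tied to
    indicators: list[str] = field(default_factory=list)


def review(question):
    """Return a list of warnings for one evaluation question (hypothetical helper)."""
    warnings = []
    unique = set(question.indicators)
    if len(unique) < 2:
        warnings.append("fewer than two indicators, so nothing to triangulate")
    if unique and unique <= TRADITIONAL:
        warnings.append("relies only on traditional measures")
    return warnings


# Example plan. Outcomes and indicators are assumptions made up for this sketch.
questions = [
    EvaluationQuestion(
        text="Are participants learning what we expected?",
        outcome="Youth develop new media production skills",
        indicators=["attendance", "portfolio_review", "participant_interviews"],
    ),
    EvaluationQuestion(
        text="Are there any issues with implementation of the program?",
        outcome="Program runs as planned",
        indicators=["attendance"],  # the same indicator reused for a second question
    ),
]

for q in questions:
    print(q.text, review(q))
```

In this sketch the first question passes both checks, while the second is flagged twice, which suggests adding a nontraditional indicator such as staff observations before relying on its answer.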

Don’t start with indicators!

Start by determining your desired outcomes. If you start with indicators, you run the risk of defaulting to only the easiest and most obvious measures.5 The outcomes of connected learning can be much richer than that. Start with the outcomes, and then figure out the best way to measure your progress towards those outcomes.

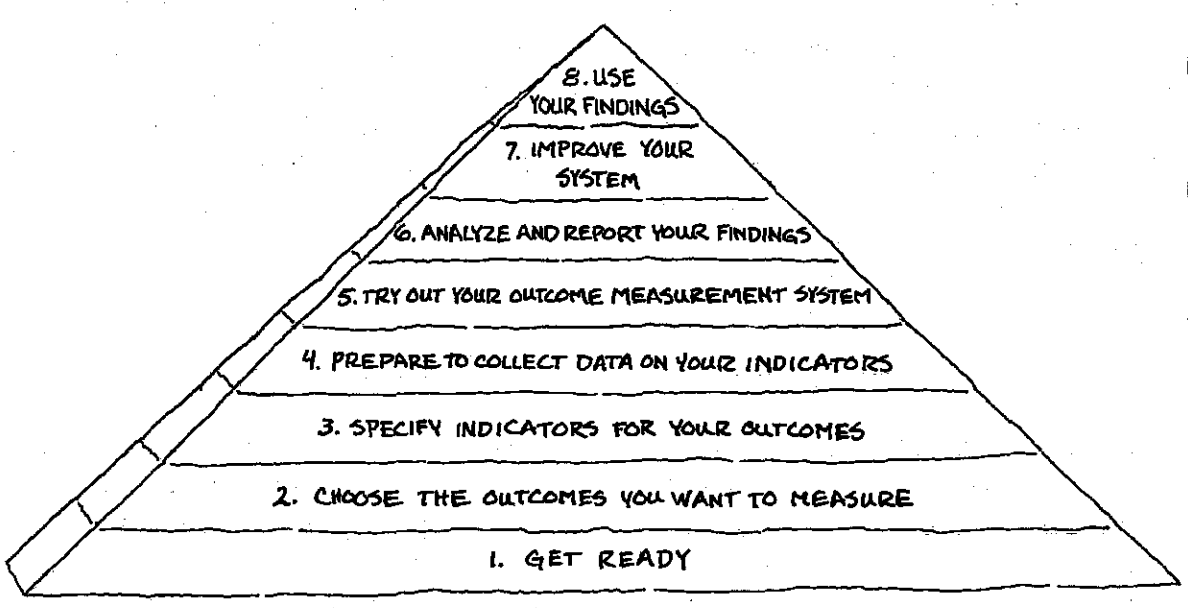

United Way has identified eight “steps to success” in measuring program outcomes.

Source: Measuring Program Outcomes: A Practical Approach

- Get ready

- Choose the outcomes you want to measure

- Specify indicators for your outcomes

- Prepare to collect data on your indicators

- Try out your outcome measurement system

- Analyze and report your findings

- Improve your system

- Use your findings

References

1: Evaluation Use, by Marvin C. Alkin, in the Encyclopedia of Evaluation.

2: Principal Investigator’s Guide: Managing Evaluation in Informal STEM Education Projects, by R. Bonney, K. Ellenbogen, L. Goodyear, & R. Hellenga, pp. 13, 16, 61. Center for Advancement of Informal Science Education, 2001.

3: The 2010 User-Friendly Handbook for Project Evaluation, by J. F. Westat, pp. 8-9. National Science Foundation, 2010.

4: Principal Investigator’s Guide: Managing Evaluation in Informal STEM Education Projects, by R. Bonney, K. Ellenbogen, L. Goodyear, & R. Hellenga, p. 51. Center for Advancement of Informal Science Education, 2001.

5: W. K. Kellogg Foundation Evaluation Handbook, p. 33.